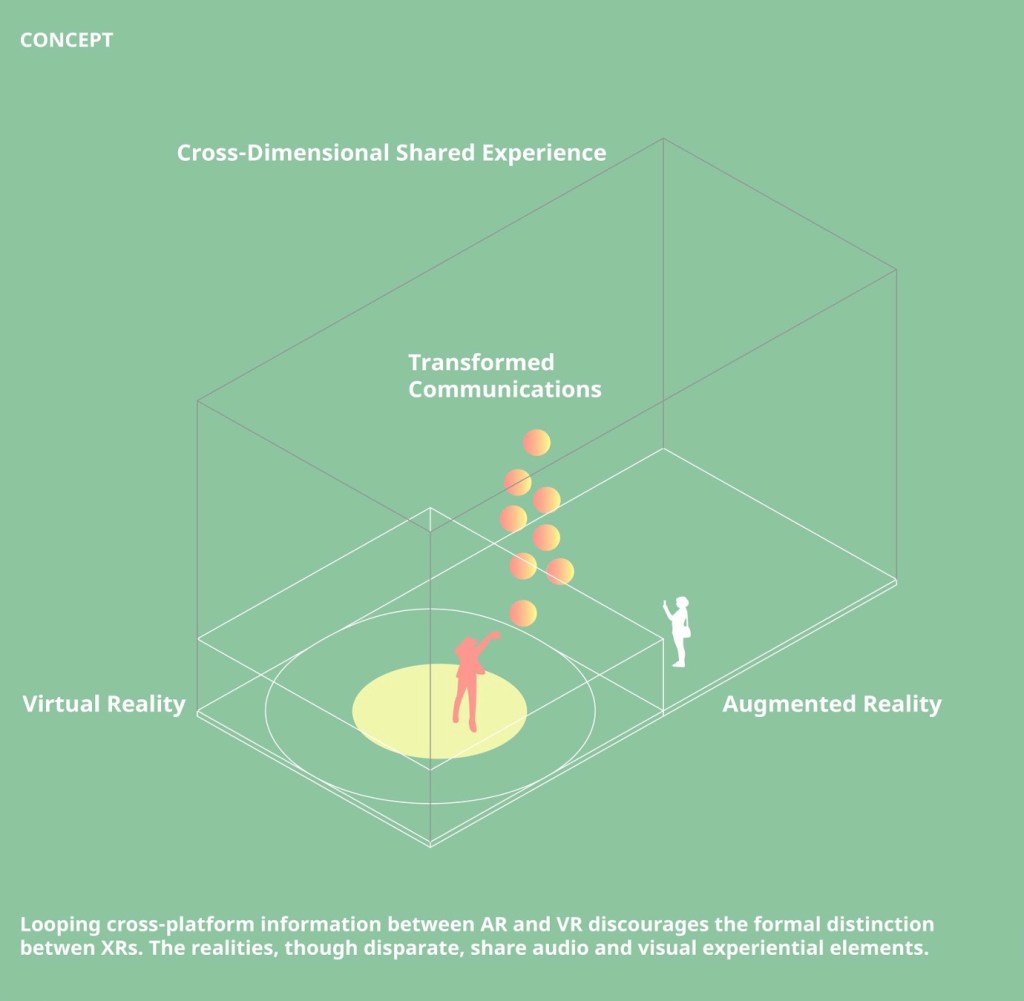

Bird Box is a multi-participant AR/VR experience in which one person paints in a VR world, a second person paints in reality overlaid with AR, and each sees transformed versions of the other's art appear in their own space in real time.

The project was developed at the Reality Virtually Hackathon at the MIT Media Lab, January 17–21, 2019, where it won the Best in Art, Media & Entertainment Award.

As humans, we each inhabit our own unique reality. We can never experience the rich synaptic context of thoughts, memories, and emotions that define another person's perception of the world. So even though we often feel or assume that we are communicating our ideas to others with complete clarity, this is almost never true: there will always be transformations and noise in the signal.

Bird Box is a metaphor for all the layers of richness that are lost in communication. The title is a nod to the Netflix film of the same name, in which some people who view the same unknown entity experience enlightenment while others encounter their worst fears.

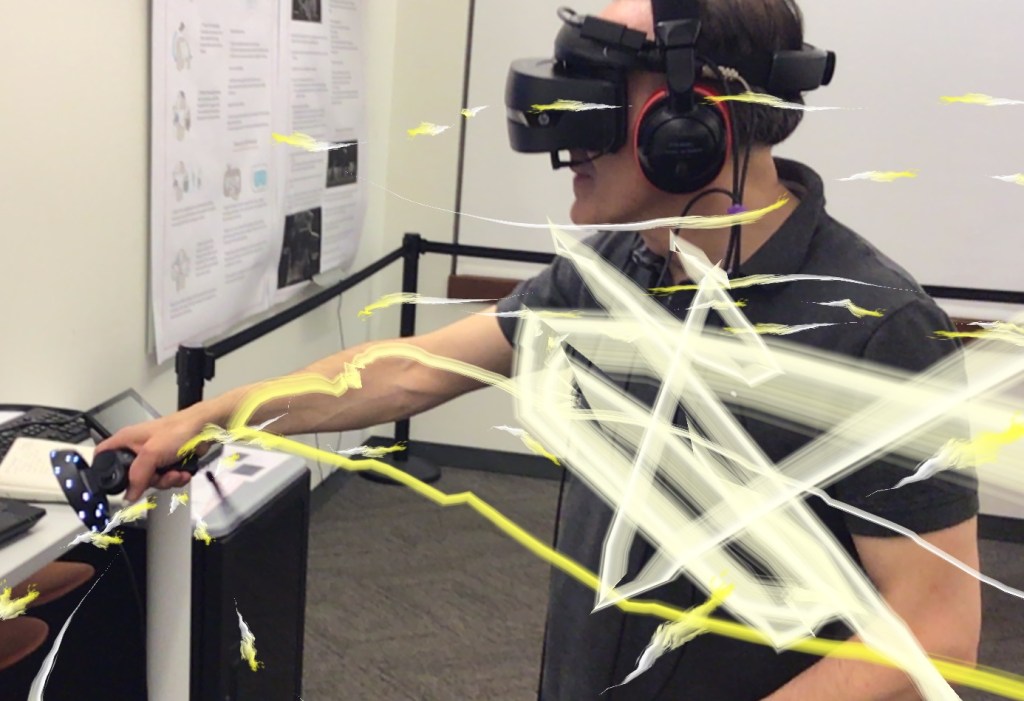

Participants in Bird Box share an audiovisual space in which their experience of each other's actions is largely similar, yet irreconcilably different. The participant in VR is enclosed in a sound cage, creating music with their motions as they paint on the canvas of a surreal virtual world. The participant in AR, who paints digitally in the real world, can hear the VR participant's music but can never share in the act of composing. The paint strokes each participant creates in their own reality appear as traces in the other participant's world.

In the course of design, we pivoted the original concept many times. At first, the project involved transforming a room into a spatial instrument in which two people had to collaborate to create beautiful sounds: one in VR, blind to the environment, and one in AR, unable to trigger the notes. We found ourselves repeatedly drawn to the delicate tension between two people who cannot see eye to eye, so we shifted our focus to the imperfect act of communication as a visually creative, interactive experience.

The concept art and the score were created specifically for this project during the hackathon. We used some free 3D models from Google Poly and TurboSquid, and a few sound effects in the demo video come from freesound.org. Apart from these freely available web assets, every part of the project was created during the hackathon.

Creative team

Alessio Grancini (concept, sound design, and Unity programming), Runze Zhang (AR/VR networking infrastructure and Unity programming), Emily Shoemaker (experience design), Kelon Cen (concept and 3D scene design), and David Tamés (interface design and Unity programming)

Built with

Logic Pro, Maya, Photoshop, Rhino, Unity, ARCore, ARFoundation, OpenVR, VIU, and Photon.